You can simplify BPMN work, and at the same time make the resulting diagrams simpler and clearer, by accepting the following assumptions:

- All information is stored

- The organization has a mechanism for assigning and transferring tasks

- Every task is accompanied by an appropriate instruction

- Every task has a standard duration, and there is a way to control it

1. All information is stored

Don’t ask how and where process data (attributes) are stored. Just take it for granted that there is some dedicated storage and that you are able to handle it.

For example, you may have an RDBMS and know how to design data structures: your engineers are familiar with the third normal form, entity-relationship diagrams, SQL, etc.

Of course, we won’t abandon data design completely and will need to set it up sooner or later. But later is better: whenever there is an opportunity to divide a complex task into two relatively independent ones (process modeling and data modeling, in this case), one should take it, because doing so simplifies things considerably.

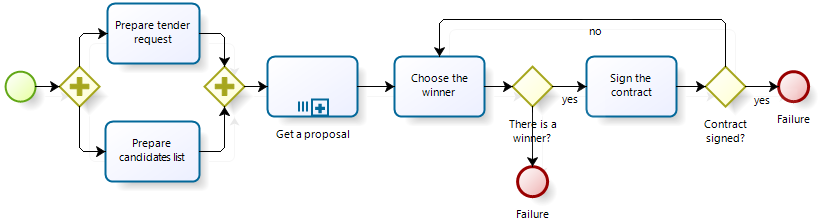

Example: the tender process.

Question: What data are used to choose the winner?

Answer: The proposals received from candidates (and possibly their ratings), which result from the “Get a proposal” subprocess.

That’s enough: we can design the process on the assumption that the proposals received from candidates are stored somewhere and are available to the subsequent process tasks. It doesn’t really matter exactly how they are stored: on paper, as Excel sheets received by email, as XML documents obtained through e-commerce, or in a relational table.
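The assumption can be illustrated with a small sketch: the “choose the winner” task depends only on an abstract proposal store, so the storage backend can be swapped without touching the process logic. All names here (`Proposal`, `ProposalStore`, `InMemoryProposalStore`, `choose_winner`) and the selection rule are hypothetical illustrations, not part of the tender process as described.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Proposal:
    candidate: str
    price: float
    rating: float  # hypothetical score produced by "Get a proposal"


class ProposalStore(Protocol):
    """Anything that can save and return proposals: SQL, Excel, XML, ..."""

    def save(self, tender_id: str, proposal: Proposal) -> None: ...

    def proposals_for(self, tender_id: str) -> list[Proposal]: ...


class InMemoryProposalStore:
    """Simplest backend; a spreadsheet or RDBMS adapter could replace it."""

    def __init__(self) -> None:
        self._data: dict[str, list[Proposal]] = {}

    def save(self, tender_id: str, proposal: Proposal) -> None:
        self._data.setdefault(tender_id, []).append(proposal)

    def proposals_for(self, tender_id: str) -> list[Proposal]:
        return list(self._data.get(tender_id, []))


def choose_winner(store: ProposalStore, tender_id: str) -> Proposal:
    """'Choose the winner' task: it only assumes the proposals are available."""
    proposals = store.proposals_for(tender_id)
    if not proposals:
        raise ValueError(f"no proposals stored for tender {tender_id!r}")
    # Hypothetical rule: highest rating wins; the lower price breaks ties.
    return max(proposals, key=lambda p: (p.rating, -p.price))


store = InMemoryProposalStore()
store.save("T-1", Proposal("Alpha Ltd", price=120.0, rating=4.5))
store.save("T-1", Proposal("Beta Inc", price=100.0, rating=4.5))
print(choose_winner(store, "T-1").candidate)  # Beta Inc
```

The process task never learns whether proposals live in memory, in a spreadsheet, or in a database; only the store implementation would change.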

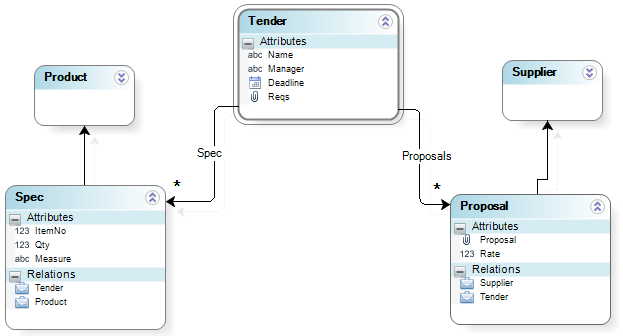

Now, if we are going not just to model the process but also to automate it, we will have to work on the data schema as soon as the process diagram is ready. For example, the E-R diagram of the tender process data may look like this:

Later, when the process is modified, the data model will be amended as well. But don’t try to combine these two aspects in one diagram: there are proven instruments for data modeling, and BPMN is for the process part.
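When the process is automated, the data model can live entirely outside the BPMN diagram, for instance as a relational schema. The sketch below uses Python’s built-in `sqlite3` module. The tables and columns are a hypothetical guess at tender data and are not a transcription of the article’s E-R diagram.

```python
import sqlite3

# Hypothetical tender schema; the real E-R model may have other entities.
SCHEMA = """
CREATE TABLE tender (
    id                 INTEGER PRIMARY KEY,
    subject            TEXT NOT NULL,
    winner_proposal_id INTEGER REFERENCES proposal(id)  -- NULL until decided
);
CREATE TABLE candidate (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE proposal (
    id           INTEGER PRIMARY KEY,
    tender_id    INTEGER NOT NULL REFERENCES tender(id),
    candidate_id INTEGER NOT NULL REFERENCES candidate(id),
    price        REAL NOT NULL,
    rating       REAL,
    UNIQUE (tender_id, candidate_id)  -- one proposal per candidate per tender
);
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript(SCHEMA)
conn.execute("INSERT INTO tender (id, subject) VALUES (1, 'Office supplies')")
conn.executemany(
    "INSERT INTO candidate (id, name) VALUES (?, ?)",
    [(1, "Alpha Ltd"), (2, "Beta Inc")],
)
conn.executemany(
    "INSERT INTO proposal (tender_id, candidate_id, price, rating) VALUES (?, ?, ?, ?)",
    [(1, 1, 120.0, 4.5), (1, 2, 100.0, 4.0)],
)

# Data the "choose the winner" task would read.
rows = conn.execute(
    """SELECT c.name, p.price, p.rating
       FROM proposal AS p JOIN candidate AS c ON c.id = p.candidate_id
       WHERE p.tender_id = ?
       ORDER BY p.rating DESC""",
    (1,),
).fetchall()
print(rows)  # [('Alpha Ltd', 120.0, 4.5), ('Beta Inc', 100.0, 4.0)]
```

If the process later changes, only this schema needs amending; the BPMN diagram stays free of table and column details.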

Although BPMN has data objects and data stores, they are a kind of “second-class citizen”: control flows are mandatory in BPMN, while data flows are optional.

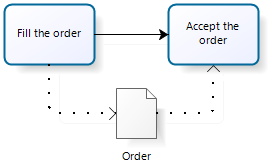

This sounds unusual to some analysts and may even meet with resistance. Experience with other notations may have formed a habit of using data objects and data flows abundantly, as in the following example:

Does the “Order” data object aid understanding? I’d say no: if one task is named “Fill the order” and the other “Accept the order”, we may rightfully assume that the process has an “Order” attribute.
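A minimal sketch of why the explicit data object adds little: in a hypothetical engine where every process instance keeps its attributes in one shared context, the two tasks exchange the order simply by reading and writing the same attribute, and the sequence flow alone determines the order of execution. `ProcessInstance`, `run`, and the task functions are illustrative names, not a real BPM engine API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ProcessInstance:
    """Hypothetical process instance: all attributes live in one shared context."""
    attributes: dict[str, Any] = field(default_factory=dict)


Task = Callable[[ProcessInstance], None]


def fill_the_order(inst: ProcessInstance) -> None:
    # The task name alone tells the reader it produces an "order" attribute.
    inst.attributes["order"] = {"items": ["paper", "toner"], "status": "filled"}


def accept_the_order(inst: ProcessInstance) -> None:
    # No explicit data flow: the task reads the same instance attribute.
    inst.attributes["order"]["status"] = "accepted"


def run(tasks: list[Task]) -> ProcessInstance:
    inst = ProcessInstance()
    for task in tasks:  # the sequence flow: tasks execute one after another
        task(inst)
    return inst


instance = run([fill_the_order, accept_the_order])
print(instance.attributes["order"]["status"])  # accepted
```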

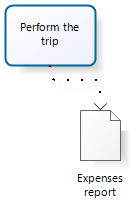

This is why I rarely use data objects: only when they are non-obvious yet essential for understanding the logic. For example, it makes sense to depict the “Expenses report” attached to the process task “Perform the trip”:

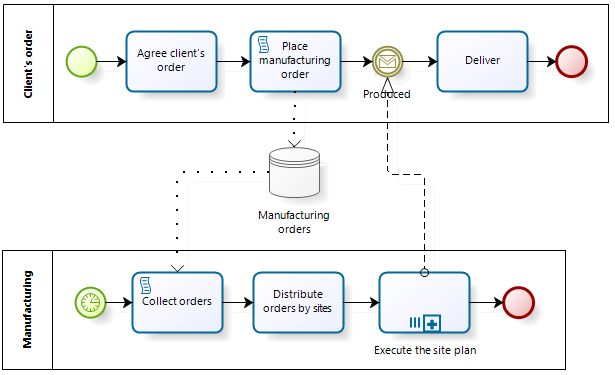

Inter-process collaboration is a special case. Unlike in orchestration, here data flows are essential for understanding the process logic:

More on the matter:

To be continued…

Regarding your statement “All information is stored”, I have a small disagreement with it.

True, until not long ago that was the case, but then social media happened, and with it the deluge of data and noise, along with the impossibility both of storing it all (it would never be used and would be a total waste) and of processing it in real time (hence the birth, or should I say rediscovery, of “Big Data”).

I bring this up because we are starting down a path where no data (or very little, actually) will be stored, as the Internet of Things, big data, and real-time processing become more and more widely used.

Everything is stored today, probably, but a large amount of it is useless, and most of what we will use in the (very) near future won’t be stored.

Sorry, time to change your assumptions…

Esteban

Esteban, there is a lot of unstructured or poorly structured data around us. But for a business process to deliver an appropriate result, the data within it must at some point become structured to the required degree. This may happen right at data input, at the entry to the process, or within the process itself. But if you do not manage to tame the chaos of data within the process, you cannot guarantee the quality of the process itself or of the result it is intended to produce. Thus, as I understand it, Anatoly meant that once you have obtained some structured data, either directly from outside or by processing unstructured data gained from outside, it is implied that you always have a place to store the result in the context of the process instance, so that the process can use it later. You might also say that sometimes one has to make decisions based on fuzzy logic, that is, on some uncertain data. I say: yes, but this case relates more to expert systems natively based on fuzzy logic. Think of them as a “business rules management” layer for your BPM system, from which the latter then obtains a structured decision for forking the workflow.
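A minimal sketch of that division of labor, under stated assumptions: a hypothetical rules function `decide` stands in for the expert system (it uses plain thresholds, not actual fuzzy logic) and turns an uncertain score into a structured decision, while a hypothetical `exclusive_gateway` on the BPM side only branches on that result. The score range, thresholds, and branch names are illustrative.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REVIEW = "review"
    REJECT = "reject"


def decide(score: float) -> Decision:
    """Hypothetical rules layer: maps an uncertain score in [0, 1] to a structured decision."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score >= 0.8:
        return Decision.APPROVE
    if score >= 0.4:
        return Decision.REVIEW
    return Decision.REJECT


def exclusive_gateway(decision: Decision) -> str:
    """The BPM side knows nothing about scores; it only forks on the decision."""
    branches = {
        Decision.APPROVE: "Sign the contract",
        Decision.REVIEW: "Send to expert review",
        Decision.REJECT: "Notify the candidate",
    }
    return branches[decision]


print(exclusive_gateway(decide(0.55)))  # Send to expert review
```

Keeping the rules outside the gateway means the thresholds (or a real fuzzy-logic engine) can change without redrawing the process diagram.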