A cross-functional process is a process involving several upper-level departments (or “functions”). From a process methodology perspective, a BPM initiative should ultimately aim at such processes, because handoffs between departments are usually the biggest source of problems and hence offer the greatest potential for improvement. Once a hierarchical organization grows beyond a certain size, its departments tend to rank their internal targets above the targets of the business as a whole.

This idea isn’t new: “breaking down the walls between departments” was the rallying cry of re-engineering in the early ’90s. The implementation proposed at that time, a single radical transformation, wasn’t very successful, but that’s another story. Modern BPM has new ideas about how to get there, but the goal remains the same.

The «functional silo» metaphor is commonly used to illustrate cross-functional problems. The analogy is as follows: once a hay silo has been filled, one can only get at a small portion of its contents, the upper layer. Likewise, resources, information, knowledge and procedures in hierarchical companies are buried in functional units: many of these assets are not available to consumers from other areas and do not contribute effectively to the goals of the company as a whole.

A functional unit tends to form a wrong view of what is “our business” and what is not. For example, it’s natural for accounting/finance to assume that accounting and reporting are their main business, while invoicing really belongs to someone else (e.g. sales) and is a nuisance that distracts from core accounting activities. Yet from a business standpoint the opposite is true: billing is part of the “Order to Cash” business process, the most important one in terms of value for the customer, while accounting and reporting are auxiliary activities. We can’t avoid them because of government requirements and our own planning needs, yet they don’t create value, and hence their cost should be minimized.

Accounting is just one example. New product development, preparing a commercial proposal, customer order fulfillment: there are a lot of things that are critical to the client, and hence to the business, yet can’t be assigned to a single business unit.

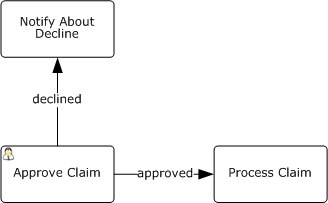

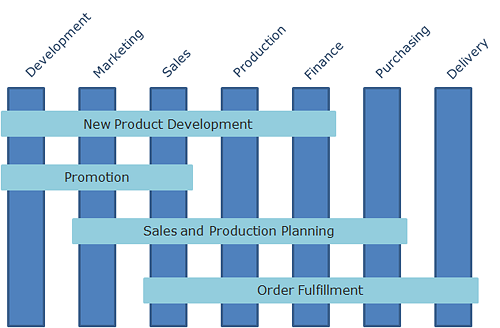

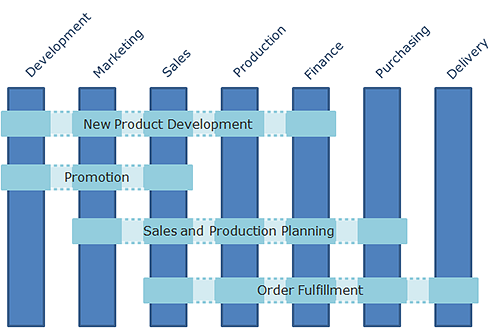

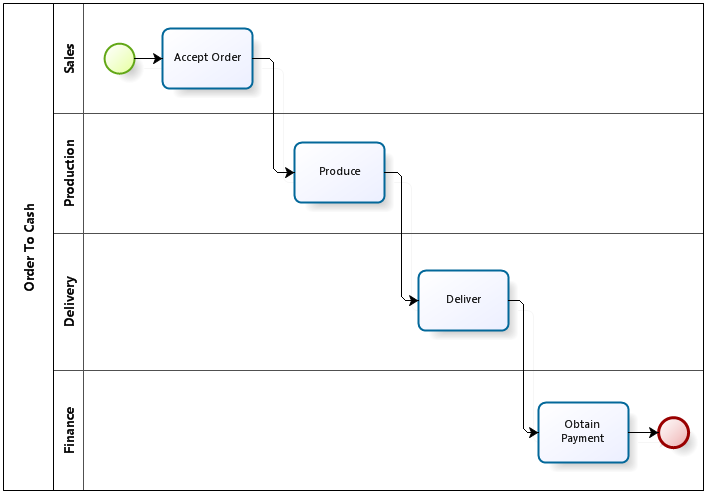

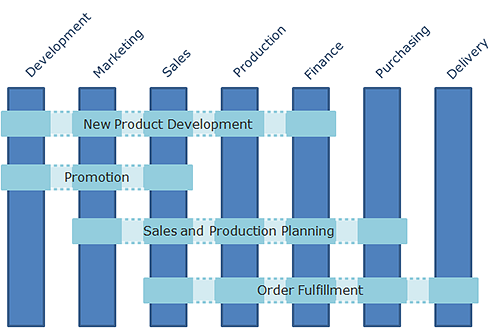

Cross-functional business processes are usually illustrated like this:

Fig. 1. Functions and cross-functional processes.

However, the picture above gives a badly wrong idea of how to resolve issues located at the borders between departments. It leads to a crude notion of the business process as a simple sequence of steps: “do this, do that, proceed further, then stop.” Businesses don’t work this way.

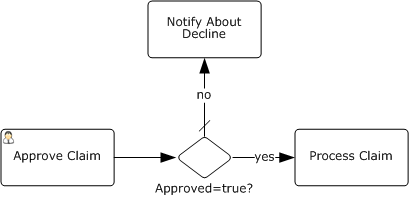

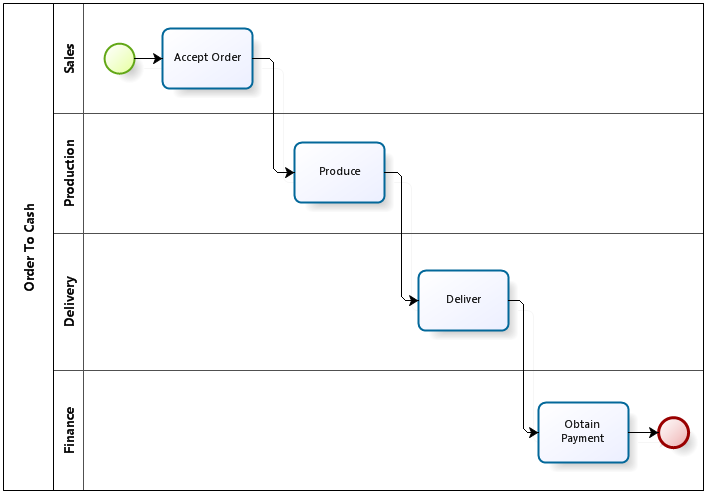

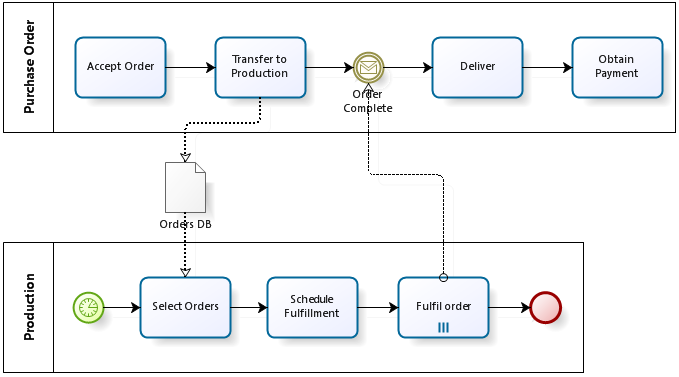

Let’s consider the “Order to Cash” process as an example. In the case of make-to-order production it would contain the following steps: accept order, produce, deliver, obtain payment.

- The process begins when the sales department receives a customer order.

- After processing the order, sales transfers it to production.

- Production starts to fulfill the order.

- The manufactured goods are delivered to the customer.

- The finance department obtains the payment.

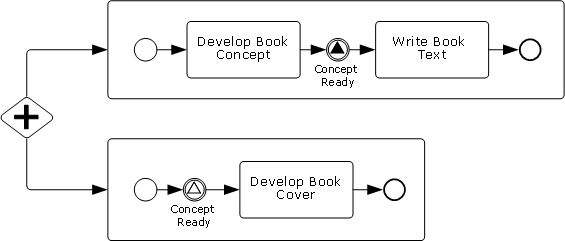

Fig. 2. «Order to Cash» cross-functional process, workflow version.

Imagine a manufacturing workshop that is empty, dark and silent. Then a client’s order arrives, the workshop manager switches the power on, and everything starts running. Nonsense? Sure. But the naive diagram above implies exactly this!
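Written as code, the workflow version of Fig. 2 is a single straight-line function. The sketch below is only an illustration (the function name and log strings are hypothetical, not taken from any BPMS): each step starts the instant the previous one finishes, and production works on this one order alone, which is exactly the “dark workshop” assumption.

```python
from typing import List


def order_to_cash_workflow(order_id: str) -> List[str]:
    """Hypothetical naive 'workflow version' of Order to Cash (Fig. 2).

    One process instance runs every step in sequence, so production is
    implicitly idle until this order arrives and then serves it alone.
    """
    log: List[str] = []
    log.append(f"sales: accepted {order_id}")
    # No queue and no schedule: production starts the moment sales is done.
    log.append(f"production: produced {order_id}")
    log.append(f"delivery: delivered {order_id}")
    log.append(f"finance: obtained payment for {order_id}")
    return log
```

Nothing in this function lets production batch orders, follow its own schedule, or serve several orders at once, which is what the real process has to handle.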

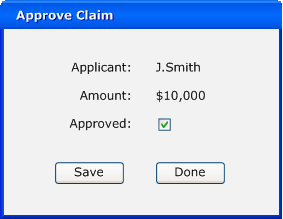

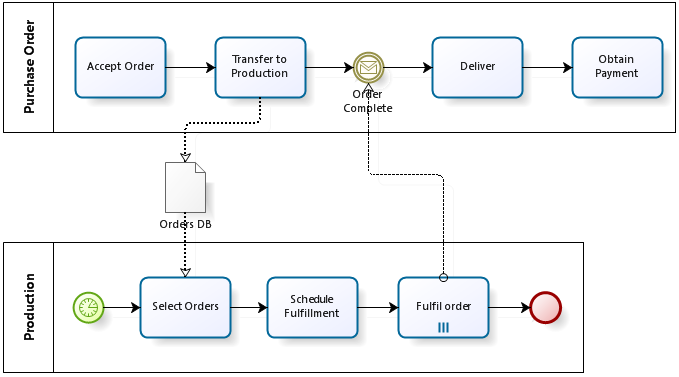

Now here is how it really works:

- Sales places the customer’s order into the production queue.

- Production planning starts periodically (e.g. daily), scans the order queue and schedules production.

- Orders are processed one by one according to the schedule; after each order is fulfilled, the corresponding client order process is notified that the goods are ready for delivery.

Or graphically:

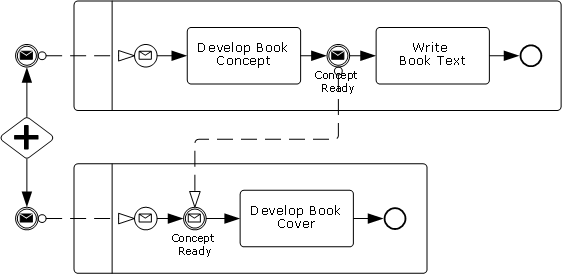

Fig. 3. «Order to Cash» cross-functional process, BPM version.

We’ve got two processes here, communicating via data (the orders database) and messages (the order execution notice). It’s fundamentally impossible to implement this within a single pool (single process), because the “Purchase Order” and “Production” processes have different triggers: the receipt of a client order and a timer, respectively.
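The two processes of Fig. 3 can be sketched in Python as a minimal, single-threaded simulation. Everything here is an assumption for illustration: the names `Company`, `OrderProcess` and `production_planning_tick` are hypothetical, the order queue is plain FIFO, `capacity` (orders per planning run) is invented, and a direct method call stands in for the “goods ready” message a real BPMS would route. The point is the two separate triggers: `receive_client_order` is started by a client, `production_planning_tick` by a timer, and they share only the order queue (data) and the notification (message).

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List


@dataclass
class OrderProcess:
    """One instance of the hypothetical "Purchase Order" process."""
    order_id: str
    state: str = "waiting for goods"

    def on_goods_ready(self) -> None:
        # Message handler: production reports that this order's goods are ready.
        self.state = "ready for delivery"


@dataclass
class Company:
    # Shared data between the two processes (the "orders database").
    order_queue: Deque[str] = field(default_factory=deque)
    # Running Purchase Order instances, looked up by order id.
    instances: Dict[str, OrderProcess] = field(default_factory=dict)

    def receive_client_order(self, order_id: str) -> None:
        # Trigger 1: a client order starts a new Purchase Order instance,
        # which only queues the order and then waits for a message.
        self.instances[order_id] = OrderProcess(order_id)
        self.order_queue.append(order_id)

    def production_planning_tick(self, capacity: int) -> List[str]:
        # Trigger 2: a timer (e.g. daily) starts production planning,
        # which scans the queue and schedules up to `capacity` orders.
        count = min(capacity, len(self.order_queue))
        schedule = [self.order_queue.popleft() for _ in range(count)]
        for order_id in schedule:
            # After producing the order, notify the matching instance.
            self.instances[order_id].on_goods_ready()
        return schedule
```

With three orders received and `capacity=2`, one planning run fulfills the first two and leaves the third instance waiting until the next run: behaviour the workflow version cannot express.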

The same goes for delivery and payment: they can hardly be implemented within the “Purchase Order” pool. So technically there would be even more than two processes (pools).

Workflow, BPM, and multithreaded programming

As the example above shows, cross-functional processes can’t be implemented with a simple workflow. The boundaries between business units can’t be ignored, because different units operate at different rhythms and follow different routines. Nor can these boundaries be eliminated simply by depicting the flow of work from one unit to another, as in Fig. 2.

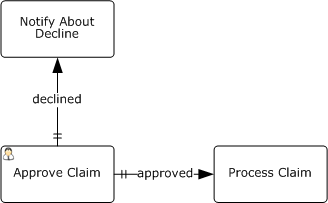

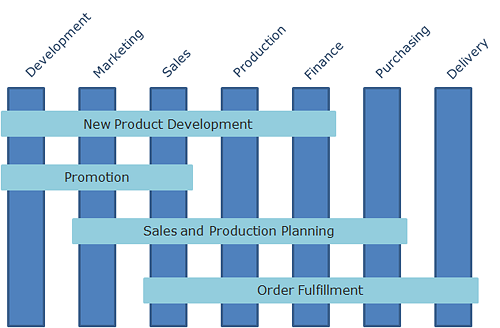

Technically, the cross-functional processes are implemented by inter-process patterns one of which is shown in Fig. 3. Getting back to the methodology, the picture shown in Fig. 1 should be drawn like this:

Fig. 4. Cross-functional process as a coordinator of functions.

A workflow only covers work within a single function. Once we go beyond it, i.e. once we aim at cross-functional processes and deal with handoffs between units, we have to rely on interaction between workflows.

Switching from workflow to inter-process communication means switching from single-threaded to multi-threaded programming.

Unfortunately, in many cases this is a tough barrier.

- Some people don’t see this barrier. They hit it but don’t realize what the problem really is.

- Others instinctively bypass the barrier by implementing BPM pilot projects aimed at processes like “Vacation Request”. A pilot like this is bound to succeed, but does it have any value for the business?

I believe this is the source of most of the disappointment in BPM: those who narrow it down to workflow end up with a predictable failure.

Technically, multithreading is what distinguishes BPM from workflow. Remove the interaction between asynchronously executing processes via data, messages and signals, and what you get is “workflow on steroids”, not BPM.

Unfortunately, this is the case with many software products aggressively marketed as BPMS. For me, the main BPMS criterion is support for BPMN-style messages. There are other criteria, of course, but this one is the most useful at the moment. Everything else (graphical modeling, workflow engine, web portal, monitoring) is usually implemented, better or worse, but many products miss inter-process communication entirely. Most likely this is not because it’s that difficult, but rather because no one has explained how important it is.
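What “support for BPMN-style messages” asks of an engine can be sketched as message correlation: a message carries a name and a correlation key (here an order id) and is delivered to exactly the one process instance waiting for that pair, while a signal is broadcast to every subscriber. The `MessageBus` class below is a hypothetical toy, not any product’s API. Buffering messages that arrive before their recipient is waiting is a design choice of this sketch; real engines differ in how they treat such messages.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

Handler = Callable[[dict], None]
Key = Tuple[str, str]  # (message name, correlation key)


class MessageBus:
    """Toy correlation of BPMN-style messages and signals (hypothetical API)."""

    def __init__(self) -> None:
        # The single instance currently waiting for each (name, key) pair.
        self._waiting: Dict[Key, Handler] = {}
        # Messages that arrived before their recipient started waiting.
        self._buffered: DefaultDict[Key, List[dict]] = defaultdict(list)
        # Every handler subscribed to each signal name.
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def wait_for(self, name: str, key: str, handler: Handler) -> None:
        """An instance declares it waits for message `name` correlated by `key`."""
        pending = self._buffered.get((name, key))
        if pending:
            # The message came early: deliver the oldest one right away.
            handler(pending.pop(0))
            if not pending:
                del self._buffered[(name, key)]
        else:
            self._waiting[(name, key)] = handler

    def send(self, name: str, key: str, payload: dict) -> None:
        """Deliver a message to exactly one correlated instance, or buffer it."""
        handler = self._waiting.pop((name, key), None)
        if handler is not None:
            handler(payload)
        else:
            self._buffered[(name, key)].append(payload)

    def subscribe_signal(self, name: str, handler: Handler) -> None:
        """Register a handler that receives every broadcast of signal `name`."""
        self._subscribers[name].append(handler)

    def broadcast_signal(self, name: str, payload: dict) -> int:
        """Deliver a signal to every subscriber; return how many received it."""
        handlers = list(self._subscribers.get(name, []))
        for handler in handlers:
            handler(payload)
        return len(handlers)
```

For example, if the instance for order A2 is not yet waiting when its “goods_ready” message is sent, the message is held and delivered as soon as that instance calls `wait_for`; a message for A1 never reaches A2’s handler.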

Yet saying “get used to the multithreaded programming of processes” is easier than following the advice. Complaints about BPMN complexity are common: “who invented these damned 50 different BPMN events!”

The name of complexity is business, not BPMN!

Whoever promises a simple solution to business issues, whether it’s BPM or something else, do not believe them. Business is a human competition by nature: smart people compete to live better than others. Therefore business has been, and will remain, a complex matter.

The complexity of BPMN isn’t excessive; it’s adequate to the complexity of business. Students in my BPMN training don’t ask why there are so many events: none of them is superfluous. And by the way, note that BPMN 2.0 is practically no different from 1.x in its workflow part; the standard evolves by supporting more sophisticated multithreaded programming: choreography and conversation.

The business can only be programmed as a multithreaded system.

BPM and ACM

Here I deliberately step onto slippery ground, because ACM (Advanced/Adaptive Case Management) fans may respond: “A-ha! We have always said that business cannot be programmed!”

Maybe it can, maybe it can’t… most likely it’s possible in some cases but not in others.

They say the share of knowledge work relative to routine work is constantly growing. But where exactly is it growing? Mostly at US companies that offshore routine activities to Asia: a predictable observation for analysts located in the US. But as the amount of knowledge work grows in one place, the amount of routine work grows in another. And managing routine procedures running on the other side of the globe is the best task for BPM one can imagine.

I would like to ask ACM enthusiasts who criticize BPM: are you sure you’re criticizing BPM and not workflow? Aren’t the objects of your criticism BPM projects that either try to solve business problems with workflow or have no business agenda at all?

If this is the case, then the failure is quite predictable, but it doesn’t mean that BPM points the wrong way; it just means that more thorough work is needed.

ACM is indeed a good thing, but only as an extension to BPM, not as a replacement. Besides, ACM today is less mature than BPM, so those who make mistakes with BPM are likely to make even worse mistakes with ACM.

To be continued…

…with the major patterns of inter-process communication and a word of warning about the opposite extreme: excessive use of inter-process communication. Stay tuned.